The 1000x Lab Initiative First Congress in Austin brought together leaders in industry, academia, and government to explore new ways to accelerate materials R&D. Modeled after the SciPy community, a primary goal of the initiative is to establish an analogous community developing and sharing open source tools for fast, inexpensive, and scalable materials measurements. Above, Mark Simon, R&D Director at Saint-Gobain, delivers an industry keynote presentation.

The 1000x Lab: Accelerating Materials Science and Chemistry R&D

Authors: Eric Jones, Ph.D., CEO, Michael Heiber, Ph.D., Application Engineer, Colin McNeece, Ph.D., Director, Digital Transformation Services, Amir Khalighi, Ph.D., Manager, Digital Transformation Services, Roger Bonnecaze

Industry R&D Motivations

Materials science and chemistry laboratories gain significant advantage by moving from limited, expensive experiments yielding a small amount of data (most often the situation today), to large numbers of lower cost experiments yielding orders of magnitude more data. This advantage comes through increased knowledge, efficiency of expert time, and greater lab throughput. This is the idea behind The 1000x Lab initiative.

Increasingly, leaders in the materials and chemistry industries seek to leverage data-driven models (AI and ML) and calibrated simulations to accelerate product development at lower costs. They imagine using these methods to replace expensive laboratory measurements. The vision is good, but the strategy is at risk of undermining the ultimate goal.

Building data-driven models and eliminating experiments are often mutually exclusive in scientific discovery. The strength of data-driven models is proportional to the amount and quality of data that they are trained on, and experiments are where the data are produced. For data-driven methods to have the most business impact, labs must significantly reduce the cost per data point.

This vision of data-driven R&D relies on a significant change to the status quo in many laboratories. There are shining exceptions, but in many industrial specialty chemical labs, we have consistently seen opportunities to increase lab throughput by 10x, 100x, or even 1000x, at a lower cost per sample, by redesigning experimental workflows to utilize automation and other digital tools.

However, these ideas are often perceived as too expensive or impractical, the change too radical. This hesitation is not surprising. The majority of scientists have spent their careers in labs designed around and reliant on labor-intensive human interaction. Realizing the lab of the future will require addressing gaps in skills and imagination and, most importantly, providing examples of what is possible.

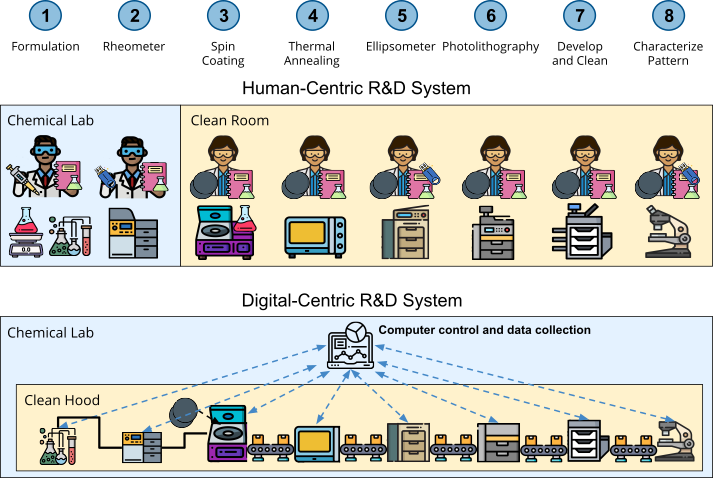

Figure 1: There is a major need to rethink traditional, human-centric R&D systems. In this example, humans manually perform fabrication and characterization tasks, move samples between physical workstations around the lab, and record/save data in lab notebooks or on USB data drives. Instead, modular, digital-centric R&D systems execute the most common and time-consuming tasks so that expert scientists can spend more time on data analysis and decision making.

The Path to Accelerated Experimentation

To address this challenge, Enthought CEO Eric Jones and UT-Austin Prof. Roger Bonnecaze have created The 1000x Lab initiative. The goal of this open innovation initiative is to establish a community of practice for people developing tools and methodologies, and to provide examples that can help scientists embrace transformative change in their materials science and chemistry laboratories.

Modeled after the SciPy open-source scientific Python community that Enthought CEO Eric Jones founded in 2002, the initial goal is to establish an engaged community developing and sharing open source tools for fast, inexpensive, scalable science, focused on materials processing and characterization. We believe that the necessary lab design shifts to deliver 1000x lab performance can be facilitated through the following principles:

- Bring measurements to materials: Design experiments to incorporate miniaturized, modularized measurement devices in the material fabrication and testing pipeline, rather than taking the materials out for external characterization, to gather more data faster.

- Miniaturize measurements: Often industrial standards require large amounts of material for testing. In many cases, correlative results may be possible with much smaller sample sizes, thereby lowering the cost to test each candidate material.

- Utilize appropriate technology: Often measurements are done with very expensive instruments when much simpler, less expensive equipment would do. This can enable automation in areas where it was previously considered to be too expensive.

- Standardize common processes: Best practices for automating common steps in sample preparation and handling are not well-established. For example, liquid and powder measurement and dosing are often a challenge that stops lab automation in its tracks. Using the above, establish methods and design equipment as building blocks for these common tasks to accelerate adoption of automated lab workflows.

- Facilitate data storage and use: Automation generates large quantities of valuable information. Managing this data so that it is available for analysis (contextualized, searchable, easily retrieved) is critical for extracting value from automated lab systems.
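As a rough illustration of the last principle, the sketch below shows one way a measurement could be stored together with its context so that it stays searchable later. It is a minimal example under stated assumptions, not a description of any tool used by the initiative: the `Measurement` class, the `find` helper, and all field names, instrument names, and values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Measurement:
    """One measured value plus the context needed to find and reuse it later.

    All field names here are hypothetical, chosen only for illustration.
    """
    sample_id: str
    instrument: str
    quantity: str
    value: float
    unit: str
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    tags: dict = field(default_factory=dict)


def find(records, **criteria):
    """Return the records whose attributes or tags match every criterion."""
    def matches(record):
        # Look for the key as an attribute first, then fall back to the tags.
        return all(
            getattr(record, key, record.tags.get(key)) == wanted
            for key, wanted in criteria.items()
        )
    return [r for r in records if matches(r)]


# Made-up example data, not real measurements.
records = [
    Measurement("S-001", "viscometer-A", "viscosity", 12.5, "mPa*s", tags={"batch": "B1"}),
    Measurement("S-002", "viscometer-A", "viscosity", 48.0, "mPa*s", tags={"batch": "B2"}),
    Measurement("S-001", "camera-1", "film_thickness", 110.0, "nm", tags={"batch": "B1"}),
]

batch_b1 = find(records, batch="B1")
viscosities = find(records, quantity="viscosity")
```

In practice a database or data platform would likely replace the in-memory list, but the design point is the same: each value travels with its sample, instrument, unit, and time, so it can be retrieved by context rather than by file name.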

To expand on the concept of “appropriate technologies”: It has never been easier to create customized, low cost components using digital design tools, simulation software, and 3D printing. While these concepts are quickly being embraced by the passionate hobbyist and in a number of manufacturing industries, the uptake in R&D labs is lagging.

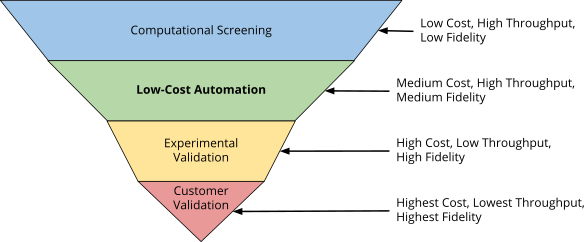

This lag is in part due to the false notion that every lab experiment must be of the highest quality, and reflect exactly what measurements a customer is requesting, for example to meet certified test standards. There will always be a need for high quality lab measurements, especially those that are reported for compliance or in a certificate of analysis. However, only a small fraction of materials that go through a modern lab need such scrutiny.

Figure 2: In a materials screening workflow, a new modality of low-cost, high-throughput sample fabrication and characterization can provide the data needed to filter out a majority of the candidates before slower, more expensive measurements are needed.
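The economics of such a screening funnel can be sketched with simple arithmetic. The example below is illustrative only: the function name, the candidate count, the per-sample costs, and the pass fraction are all assumed numbers chosen to show the shape of the calculation, not figures from the initiative or from any real lab.

```python
def screening_cost(n_candidates, cheap_cost, expensive_cost, pass_fraction):
    """Total cost of a two-stage funnel.

    Every candidate gets the cheap proxy measurement; only the fraction
    that passes goes on to the expensive measurement.
    """
    n_survivors = round(n_candidates * pass_fraction)
    return n_candidates * cheap_cost + n_survivors * expensive_cost


# Hypothetical inputs, for illustration only.
n_candidates = 1000
cheap_cost = 5.0        # assumed cost per low-cost proxy measurement
expensive_cost = 500.0  # assumed cost per high-quality measurement
pass_fraction = 0.1     # assumed share of candidates that survive screening

expensive_only = n_candidates * expensive_cost
two_stage = screening_cost(n_candidates, cheap_cost, expensive_cost, pass_fraction)

print(f"Expensive measurement on every candidate: {expensive_only:,.0f}")
print(f"Two-stage screening funnel:               {two_stage:,.0f}")
print(f"Cost reduction factor:                    {expensive_only / two_stage:.1f}x")
```

With these assumed inputs the funnel costs roughly a ninth of measuring every candidate at full quality. The sketch ignores the risk that a cheap proxy wrongly rejects a good candidate, which a real evaluation would need to account for.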

This shift towards open design may be viewed as a threat to the business of traditional equipment vendors, which could inhibit progress. However, not all designs must be open, and there are opportunities for a new generation of lower-cost, high-throughput, digital-centric lab equipment.

It is the intent of The 1000x Lab initiative to create an environment where the needs of industry, academia, and government are well understood so that lab builders, instrument vendors, and other parts of the supply chain can understand how to work together to cover the range of quality and throughput needs. Foundational to this are:

- A shift from human-centric to digital-centric labs and experimental designs

- Support for well-documented physical and digital interfaces that conform to best practices

- Design for inter-compatibility between modules from different technology providers

Forming the Community

The 1000x Lab initiative held its first congress in Austin, TX in February 2020, bringing together a small group of leaders from industry, academia, and government. The objective was to create a vision for how to accelerate industrial materials and chemical R&D, and for the industry participants, how The 1000x Lab initiative can impact their businesses.

The 1000x Lab: First Congress participating companies and institutions:

- Procter & Gamble

- Saint-Gobain

- Bristol Myers Squibb

- Pepsico

- Tokyo Electron

- Mitsubishi Chemical

- Enthought

- University of Texas at Austin

- University of Delaware

- University of California-Davis

- Massachusetts Institute of Technology

- University of Illinois at Urbana-Champaign

- ESPCI Paris

- National Institute of Standards and Technology

There was an array of perspectives from industry representatives in personal care products, food and beverage, semiconductor manufacturing, pharmaceutical drug development, building materials, and specialty chemicals. This was augmented by perspectives from academic and government researchers, advocating for proactive investment and innovation in materials measurement technologies.

First Congress Takeaway Messages:

- Everyone has data organization challenges.

- The pharmaceutical industry leads in lab automation and could demonstrate value and show what is possible.

- There is a need for fast, inexpensive proxy measurements for early candidate screening.

- Industry researchers are concerned about loss of quality, reliability, and support with DIY equipment, pointing to an opportunity for equipment manufacturers to get involved.

- Industry R&D labs may not have budgets or appetite to take risk on transformative workflows, highlighting a need to quantify the value of such initiatives.

- There is a need for a functional, industry-relevant example of a digital-centric R&D system to demonstrate value and lower the perception of risk.

Figure 3: The 1000x Lab: First Congress group picture.

The Path Forward

Despite the constraints imposed by the COVID-19 crisis, the initiative continues to progress. The scientific Python software community has been engaged through a virtual presentation at the SciPy 2020 annual conference, setting the stage to expand the reach of the community when conditions allow. The COVID-19 crisis itself further highlights the need for digital-centric labs, as many researchers have been forced to stay away from crowded, human-driven labs. Digital-centric labs can enable R&D scientists and technicians to continue their valuable work remotely, albeit with some constraints.

From the first congress, it became clear that a convincing example of a digital-centric R&D system is needed to generate greater industry buy-in. With this in mind, an exemplar low-cost polymer solution formulation, rheological characterization, and printing system is being developed to aid in rapid screening and optimization of electronic thin films.

To get involved with The 1000x Lab initiative and receive updates about future events, please contact us at info@1000xlab.com.

Learn more from Enthought. Working with a specialty chemicals partner, Enthought is designing low-cost optical imaging systems for the accelerated development of printed polymer films for display applications (see the SciPy 2020 conference presentation about this work).

About the Authors

Eric Jones, Ph.D., Founder and CEO at Enthought, holds a Ph.D. and M.S. in electrical engineering from Duke University and a B.S.E. in mechanical engineering from Baylor University.

Michael Heiber, Ph.D., Manager, Materials Informatics at Enthought, holds a Ph.D. in polymer science from The University of Akron and a B.S. in materials science and engineering from the University of Illinois at Urbana-Champaign.

Colin McNeece, Ph.D., holds a Ph.D. in geology from the University of Texas at Austin, an M.A. in earth and planetary sciences, and a B.A. in geology from the University of California, Berkeley.

Amir Khalighi, Ph.D., Manager, Digital Transformation Services at Enthought, holds a Ph.D. and an M.S.E. in mechanical engineering from The University of Texas at Austin and a B.S. in mechanical engineering from the Sharif University of Technology.

Roger Bonnecaze holds a Ph.D. and M.S. from the California Institute of Technology and a B.S. from Cornell University, all in chemical engineering, and is currently the William and Bettye Nowlin Chair Professor of Chemical Engineering at the University of Texas at Austin.
