AI is changing quickly, and R&D leaders and scientists need a working understanding of its key concepts so they can develop forward-looking data strategies for their companies and lead the way toward new discoveries. In the very near future, R&D scientists will be expected to know how to apply AI alongside their domain expertise.

Enthought partners with science-driven companies all over the world to optimize and automate their complex R&D data systems. Below are 10 AI concepts that we feel every scientific R&D leader should start with to help build up their knowledge.

TL;DR: Machine Learning, Neural Network, Deep Learning, Large Language Model, Reinforcement Learning, Computer Vision, Quantum Computing, Explainable AI, Agentic AI, and Artificial General Intelligence.

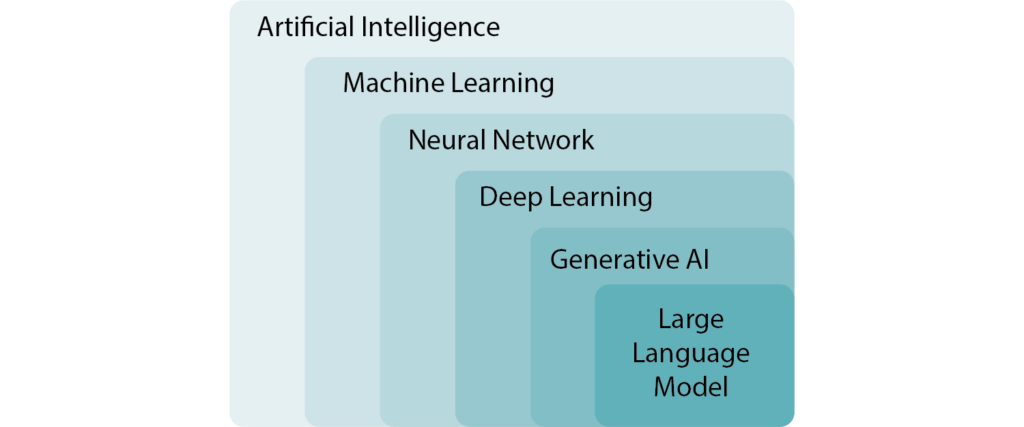

#1) MACHINE LEARNING | Machine learning (ML) is a subset of AI that focuses on developing algorithms that enable computers to perform tasks by learning from data rather than through explicit programming. Through iterative learning, ML algorithms identify underlying structures, patterns, and anomalies to make data-driven predictions and decisions. In the life sciences, machine learning algorithms can sift through extensive genomic sequences to identify markers for diseases or predict patient responses to treatments. ML can also significantly accelerate the discovery and analysis of new materials, predicting their properties and behaviors under various conditions.
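To make "learning from data rather than through explicit programming" concrete, here is a minimal sketch in plain Python. The measurements are hypothetical, and the helper name `fit_line` is ours for illustration. Nobody tells the program that the relationship is roughly y = 2x + 1; it estimates the slope and intercept from the examples using ordinary least squares, one of the simplest ML techniques.

```python
# Hypothetical measurements that roughly follow y = 2x + 1, with some noise.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.0]


def fit_line(xs, ys):
    """Ordinary least squares for one input variable.

    Returns the slope and intercept of the best-fitting straight line.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# "Training": the parameters come from the data, not from a hand-written rule.
slope, intercept = fit_line(xs, ys)


def predict(x):
    """Use the learned parameters to predict y for a new x."""
    return slope * x + intercept


print(f"learned slope={slope:.2f}, intercept={intercept:.2f}")
print(f"prediction for x=5: {predict(5.0):.2f}")
```

Real scientific datasets have many more variables and call for more capable models, but the pattern is the same: fit parameters to examples, then predict on new inputs.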

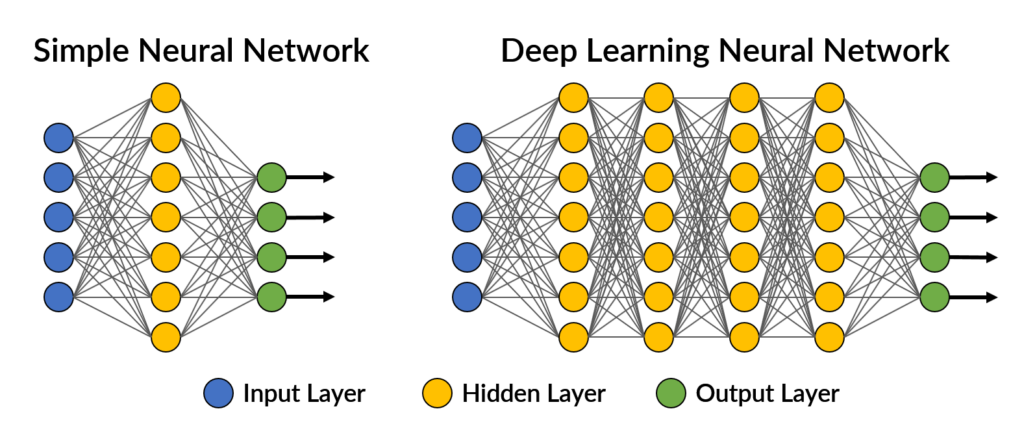

#2) NEURAL NETWORK | Neural networks are networks of simple computing elements, loosely inspired by the way neurons in the brain connect, that learn to recognize relationships in a set of data. These elements are structured in layers: an input layer, one or more hidden layers, and an output layer. Each layer is made up of nodes, or "neurons," which are interconnected and transmit signals to each other. The strength of these connections, or weights, is adjusted during the training process as the neural network learns from the data. This architecture allows neural networks to model complex patterns and dynamics in data, making them particularly effective for tasks that involve high-dimensional data and complex interactions between variables, such as image and speech recognition, natural language processing, and sophisticated pattern recognition.
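The layered structure described above can be sketched in a few lines of plain Python. This is an illustration only: the weights below are hand-picked (an assumption for the example) so that the tiny network computes XOR, a pattern that no single neuron can represent. In practice, a training procedure would adjust the weights automatically from data.

```python
def relu(z):
    """A common activation function: pass positive signals, block negative ones."""
    return max(0.0, z)


def identity(z):
    return z


def dense(inputs, weights, biases, activation):
    """One fully connected layer.

    Each node computes a weighted sum of all inputs plus a bias,
    then applies the activation function.
    """
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


# Hand-picked weights for illustration; training would normally find these.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]  # two hidden nodes, two inputs each
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]             # one output node, two hidden inputs
OUTPUT_BIASES = [0.0]


def forward(x1, x2):
    """Pass a signal through the input, hidden, and output layers."""
    hidden = dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES, relu)
    output = dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES, identity)
    return output[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", forward(a, b))
```

The hidden layer is what makes this possible: it re-represents the inputs so that the output node can separate cases a single weighted sum could not.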

#3) DEEP LEARNING | Deep learning is a subset of machine learning that employs neural networks with many layers (hence "deep"). Deep learning is distinguished by its ability to learn from vast amounts of data, often outperforming traditional ML techniques on complex tasks such as image and language understanding. You have likely seen deep learning at work in the recommendations on streaming services such as Netflix. In the sciences, deep learning has catalyzed remarkable innovations. For instance, in life sciences, it has revolutionized the prediction of protein structures (see DeepMind’s AlphaFold) as well as how we analyze genetic data, thereby considerably accelerating drug discovery and advancing personalized medicine. In chemistry, deep learning algorithms are being used to model complex chemical reactions, predict molecular behavior, and discover new compounds and catalysts.

Source: Machine and Deep Learning Approaches in Genome

#4) LARGE LANGUAGE MODEL | Large Language Models (LLMs) are advanced deep learning neural networks designed to understand, generate, and interact with human language at an unprecedented scale. These models are trained on extensive corpora of text data, encompassing a wide range of topics, languages, and styles, enabling them to represent the nuances, context, and complexities of human language. By leveraging deep learning architectures, particularly transformer models, LLMs can predict the next word in a sentence, generate coherent and contextually relevant text, and perform specific tasks like translation, summarization, and question answering with high levels of proficiency. In the sciences, LLMs can assist researchers with a growing number of tasks, such as mining research papers and patents in seconds or minutes, and can help democratize access to knowledge.
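The core idea of predicting the next word can be illustrated with a deliberately tiny stand-in. The sketch below is not a transformer and not how production LLMs work internally; it simply counts which word follows which in a made-up corpus (a "bigram" model) and predicts the most frequent follower. The corpus and the function name `predict_next` are assumptions for the example.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real LLMs train on vastly more text.
corpus = (
    "the model predicts the next word . "
    "the model learns from text . "
    "the model predicts the next word from context ."
).split()

# Count how often each word is followed by each other word.
follow_counts = defaultdict(Counter)
for current_word, next_word in zip(corpus, corpus[1:]):
    follow_counts[current_word][next_word] += 1


def predict_next(word):
    """Return the most frequent follower of `word` and its estimated probability."""
    counts = follow_counts.get(word)
    if not counts:
        return None, 0.0
    best_word, best_count = counts.most_common(1)[0]
    return best_word, best_count / sum(counts.values())


print(predict_next("the"))
print(predict_next("next"))
```

An LLM replaces the lookup table with a neural network that conditions on the whole preceding context rather than a single word, which is what lets it produce coherent, contextually relevant text.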

#5) REINFORCEMENT LEARNING | Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by performing actions in an environment to achieve a goal. The agent is trained using feedback from its own actions and experiences in the form of rewards and penalties. Unlike supervised learning, where training data is labeled with the correct answer, RL involves learning the best actions to take in various states within an environment. This approach has profound implications in fields such as robotics, where RL can be used to teach robots to perform complex tasks autonomously. In R&D, RL can also help automate the design of experiments by simulating the environment to identify the most promising paths, reducing the need for costly and time-consuming trial-and-error experiments.
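As a rough sketch of the agent, environment, and reward loop described above, the example below uses tabular Q-learning, one classic RL algorithm among many. The environment is a made-up five-position corridor in which only the rightmost position gives a reward. The parameter values and names are illustrative assumptions, not recommendations.

```python
import random

N_STATES = 5        # positions 0..4 in a corridor; position 4 is the goal
ACTIONS = (-1, +1)  # action index 0 = step left, index 1 = step right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2  # learning rate, discount, exploration rate


def step(state, action_index):
    """Environment: move along the corridor; reward 1.0 only on reaching the goal."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def train(episodes=500, max_steps=100, seed=0):
    """Learn action values (the Q-table) purely from trial, error, and reward."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            if rng.random() < EPSILON:
                action = rng.randrange(2)  # explore: try a random action
            else:
                best = max(q[state])       # exploit: pick a best-known action
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q_table = train()
# Greedy policy for each non-goal position.
policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(policy)
```

No one labels the correct move for any position; the agent works out that heading right pays off because reward information propagates backward through the table over repeated episodes.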

#6) COMPUTER VISION | Computer vision is a field within AI that enables machines to interpret and make decisions based on visual data, much as humans do with their eyesight. This technology employs algorithms to process, analyze, and understand images or videos, enabling a wide array of applications from facial recognition to autonomous vehicle navigation. Computer vision is also transforming the way data is analyzed and interpreted in multiple scientific domains. In healthcare, computer vision techniques are used for analyzing medical images, such as MRI scans or microscopy images, to identify disease markers, track cell behavior, and monitor the progression of diseases at a scale and speed that is difficult for human experts to match. Computer vision can also aid in the characterization of materials by analyzing images to determine structural properties, identify defects, or monitor changes under different conditions.
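At the lowest level, much of image analysis starts with simple numerical operations on pixel values. The sketch below, using a made-up 4x6 grayscale "image," finds a vertical edge by measuring how sharply brightness changes from left to right. It is a classical filter rather than a learned model, and the function names and threshold are assumptions for the example.

```python
# A tiny grayscale image: dark region (0) on the left, bright region (9) on the right.
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]


def horizontal_gradient(img):
    """For each interior pixel, brightness of right neighbor minus left neighbor.

    The result is two columns narrower than the input because the
    outermost pixels lack a neighbor on one side.
    """
    return [
        [row[c + 1] - row[c - 1] for c in range(1, len(row) - 1)]
        for row in img
    ]


def edge_columns(img, threshold=5):
    """Return image columns where every row shows a strong brightness change."""
    grad = horizontal_gradient(img)
    n_cols = len(grad[0])
    # Gradient column i corresponds to image column i + 1.
    return [
        c + 1
        for c in range(n_cols)
        if all(abs(row[c]) >= threshold for row in grad)
    ]


print(horizontal_gradient(image)[0])
print(edge_columns(image))
```

Modern computer vision models learn thousands of filters like this one from data instead of having them written by hand, which is how they pick up on features such as cell boundaries or material defects.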

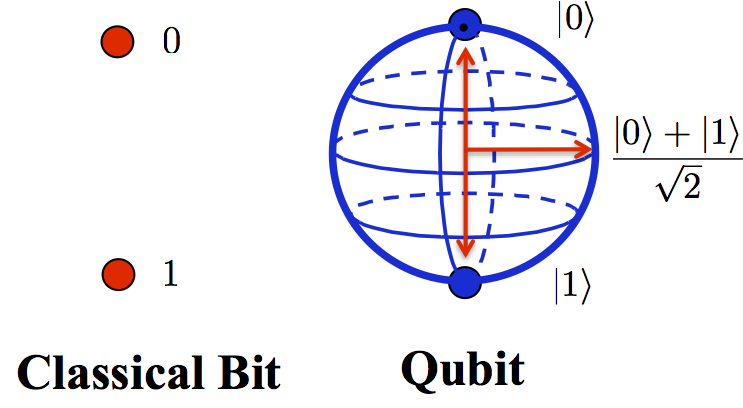

#7) QUANTUM COMPUTING | Quantum computing is a type of computation that uses the principles of quantum mechanics to process information in ways classical computers cannot. Unlike classical bits, which are either 0 or 1, quantum bits, or qubits, can exist in a superposition of 0 and 1 at the same time. Additionally, through entanglement, qubits can be correlated with each other in ways that classical bits cannot. Together, these properties allow quantum algorithms to solve certain classes of problems, such as factoring large numbers or simulating quantum systems, far faster than the best known classical methods, although they do not speed up every computation. This computing power has profound implications for scientific research and development, or is expected to once it comes to fruition. The field is still in its early stages and is limited by current hardware and algorithms. Despite these hurdles, the ongoing discussion and research into combining quantum computing with AI reflect a shared optimism about their potential to change the world.

Source: Quantum Computing at the Frontiers of Biological Sciences
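Superposition and entanglement can be illustrated with a small classical simulation of the underlying math. The sketch below tracks the four amplitudes of a two-qubit state, applies a Hadamard gate to put the first qubit into superposition, then applies a CNOT gate to entangle the pair. It runs on an ordinary computer and offers no quantum speedup. The function names are ours for illustration.

```python
import math

INV_SQRT2 = 1 / math.sqrt(2)


def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit.

    `state` holds amplitudes in the order |00>, |01>, |10>, |11>.
    """
    a00, a01, a10, a11 = state
    return [
        INV_SQRT2 * (a00 + a10),
        INV_SQRT2 * (a01 + a11),
        INV_SQRT2 * (a00 - a10),
        INV_SQRT2 * (a01 - a11),
    ]


def cnot(state):
    """Flip the second qubit when the first is 1 (swaps the |10> and |11> amplitudes)."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Probability of each measurement outcome: squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


start = [1.0, 0.0, 0.0, 0.0]           # both qubits start in 0
superposed = hadamard_on_first(start)  # first qubit is now in a superposition of 0 and 1
bell = cnot(superposed)                # the two qubits are now entangled

for label, p in zip(["00", "01", "10", "11"], probabilities(bell)):
    print(label, round(p, 3))
```

In the final state, the only possible outcomes are 00 and 11, each with probability one half: measuring one qubit tells you the other. Simulating this classically takes 2^n amplitudes for n qubits, which is one reason real quantum hardware is of interest.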

#8) EXPLAINABLE AI | Explainable AI (XAI) refers to methods and techniques that make the outcomes of AI models transparent and understandable to humans. This concept addresses one of the major challenges in AI: the black-box nature of many deep learning models, where the decision-making process is often opaque, making it difficult to interpret how these models arrive at their conclusions. In fields such as life sciences, materials science, and chemistry, where decision-making has significant ethical, safety, and regulatory implications, XAI is essential. XAI plays a crucial role in advancing the adoption of AI across these scientific domains, ensuring that AI-driven decisions are both reliable and accountable.
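One simple family of XAI techniques treats the model as a black box and asks how much the prediction changes when each input is replaced by a neutral baseline value. The sketch below shows that idea, sometimes called occlusion or ablation. The model, feature names, and values are hypothetical stand-ins, and widely used methods such as SHAP and LIME are considerably more sophisticated.

```python
def black_box_model(features):
    """Hypothetical stand-in for an opaque model that predicts a process yield."""
    return (
        3.0 * features["temperature"]
        + 0.5 * features["pressure"]
        + 0.0 * features["batch_id"]
    )


def occlusion_attribution(model, features, baseline):
    """Estimate each feature's contribution to one prediction.

    For each feature, replace its value with the baseline value and record
    how much the prediction drops compared with the full input.
    """
    full_prediction = model(features)
    attributions = {}
    for name in features:
        occluded = dict(features)
        occluded[name] = baseline[name]
        attributions[name] = full_prediction - model(occluded)
    return attributions


sample = {"temperature": 2.0, "pressure": 4.0, "batch_id": 7.0}
baseline = {"temperature": 0.0, "pressure": 0.0, "batch_id": 0.0}

attributions = occlusion_attribution(black_box_model, sample, baseline)
for name, value in attributions.items():
    print(f"{name}: {value:+.1f}")
```

Here the explanation shows that temperature drives most of the prediction and that the batch identifier has no influence, the kind of sanity check a scientist or regulator may want before trusting a model's output.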

#9) AGENTIC AI | Agentic AI systems are designed to perform tasks autonomously by making decisions and taking actions on behalf of users. Unlike passive AI, which requires explicit instructions for every action, agentic AI systems possess the capability to understand objectives, navigate complex environments, and execute tasks with minimal human intervention. Familiar AI agents include Siri, Alexa, Google Assistant, and the growing number of chatbots. Key to their functionality is the integration of reinforcement learning (RL) and decision theory, which allows agentic AI to evaluate outcomes, learn from interactions, and optimize over time. Agentic AI systems are not just reactive but proactive entities, capable of contributing to the acceleration of progress in a number of fields through autonomous operation and decision-making. As expected and warranted, there is much discussion about establishing the guardrails for agentic AI. Read Practices for Governing Agentic AI Systems for OpenAI’s newest thoughts on the topic.

#10) ARTIFICIAL GENERAL INTELLIGENCE | Artificial General Intelligence (AGI), or "strong AI," represents a currently theoretical form of AI with cognitive abilities that mirror or surpass human intelligence. Unlike specialized or narrow AI, which excels in specific tasks, AGI embodies a comprehensive suite of intellectual capabilities, including reasoning, problem-solving, perception, and learning, across diverse domains with little to no human guidance. For R&D, the implications of achieving AGI are profound. For instance, in life sciences, AGI could autonomously analyze genetic data, integrate disparate sources of medical information, and devise personalized treatment plans. However, achieving AGI involves overcoming substantial challenges, including significant ethical considerations, the creation of more advanced learning algorithms, and the development of hardware that can keep up. The debate around AGI is ongoing and ranges from whether it’s even possible to whether it’s something to pursue or prevent.

Enthought partners with science-driven companies all over the world to optimize and automate their complex R&D data systems and to leverage AI and other advanced technologies. Contact us to discuss how we can help your R&D organization.
