Scientists gain superpowers when they learn to program. Programming makes whole classes of questions easy to answer and makes new classes of questions possible to answer.

If you have some programming experience, large language models (LLMs) can raise the ceiling of your performance and productivity. Using LLMs to write code turns a challenging recall task (“What’s this function call?” “How does this API work?”) into a much easier recognition task (“Yup, that looks good” or “That looks fishy”). This post will discuss 7 ways LLMs can help you write, understand, and document code.

New LLMs come out nearly every week (at least, that’s how it feels), but we’ll show some of the simplest things that work using ChatGPT. We’re using ChatGPT because it’s flexible and performs well, even though it requires copy–pasting code and prompts between your editor and the web interface. The goal is to broaden your view of what you can do with these models.

Write Code for Popular Packages

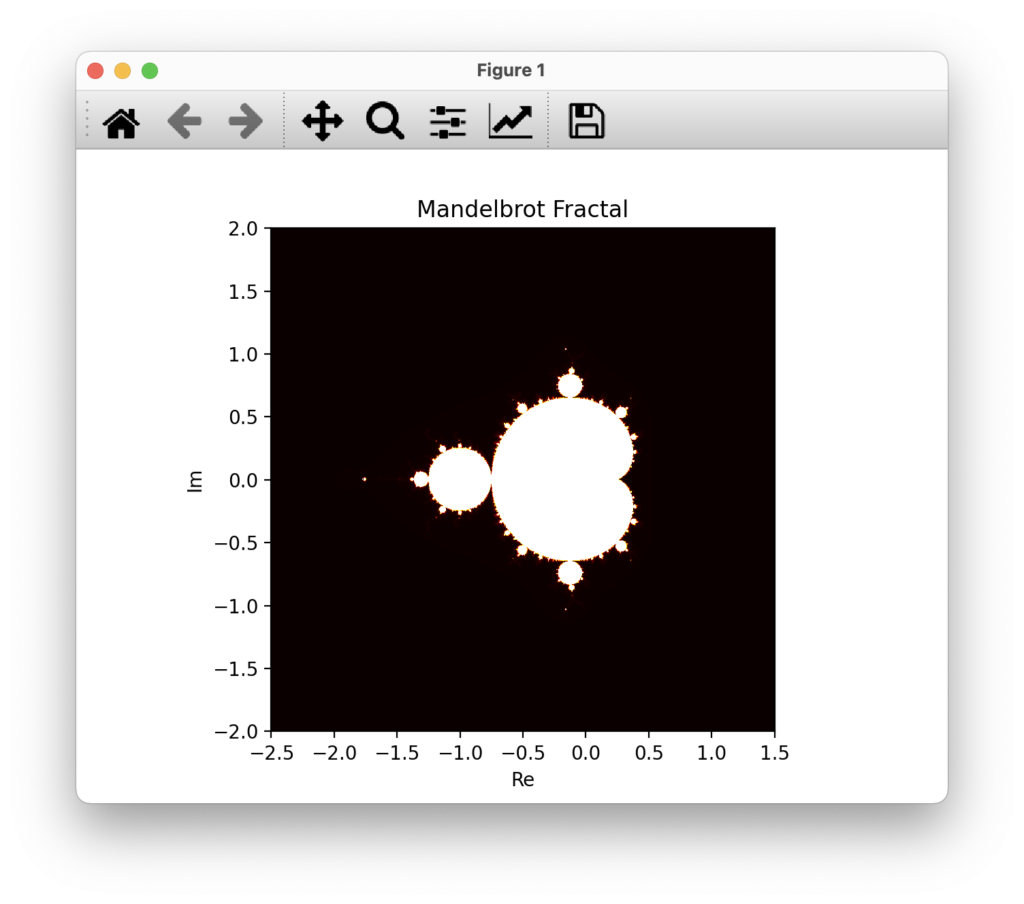

LLMs are trained on terabytes of source code. As a result, they’re familiar with popular packages and can write decent code based on an English specification. Just tell them what you want. They might do surprisingly well.

Write a Python function to calculate the mandelbrot fractal using NumPy and plot it.

Returns:

    import numpy as np
    import matplotlib.pyplot as plt

    def mandelbrot(width, height, xmin, xmax, ymin, ymax, max_iter):
        x = np.linspace(xmin, xmax, width)
        y = np.linspace(ymin, ymax, height)
        X, Y = np.meshgrid(x, y)
        C = X + 1j * Y
        Z = np.zeros_like(C)
        img = np.zeros((height, width))

        for i in range(max_iter):
            mask = np.abs(Z) < 2
            Z[mask] = Z[mask] * Z[mask] + C[mask]
            img += mask

        plt.imshow(img, cmap='hot', extent=(xmin, xmax, ymin, ymax))
        plt.title("Mandelbrot Fractal")
        plt.xlabel("Re")
        plt.ylabel("Im")
        plt.show()

    mandelbrot(width=800, height=600, xmin=-2.5, xmax=1.5, ymin=-2, ymax=2, max_iter=1000)

And the figure looks like this:

Write Code for New Packages

Despite the size of their pretraining dataset, LLMs have a few shortcomings. There are many obscure packages they have never seen and so can’t write code for or with. They’ve also been trained once and haven’t seen the latest version of every package. A growing collection of tools, like Sourcegraph’s Cody, aims to address this issue by analyzing codebases to provide the relevant context to the models. But you can also do the simplest thing possible, like in the video below.

I copied the documentation for an API (it could have been source code), asked the model to “Generate a Python wrapper for this API” and pasted the docs. I followed up with, “Write a Python cli to use that wrapper.” That’s it.

One challenge of this approach is the model’s context window length. That’s the maximum number of tokens (1,000 tokens is roughly 750 words) you can provide to the model. You quickly reach the limit with large chunks of documentation and code.
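
As a rough sketch of that budget check, the snippet below estimates a token count from a word count using the rule of thumb above (1,000 tokens for roughly 750 words). The function names, the 4,096-token limit, and the 500 tokens reserved for the reply are assumptions for illustration. Real tokenizers count differently, and limits vary by model.

```python
import math


def estimate_tokens(text: str) -> int:
    """Roughly estimate tokens from the word count (1,000 tokens ~ 750 words)."""
    words = len(text.split())
    return math.ceil(words * 1000 / 750)


def fits_in_context(text: str, context_limit: int = 4096, reserved_for_reply: int = 500) -> bool:
    """Check whether `text` leaves room for the model's reply.

    Both defaults are hypothetical; check the limit of the model you use.
    """
    return estimate_tokens(text) <= context_limit - reserved_for_reply


docs = "word " * 3000  # stand-in for a large chunk of pasted documentation
print(estimate_tokens(docs))
print(fits_in_context(docs))
```

If the estimate is over budget, paste only the parts of the documentation that describe the endpoints or functions you need.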

Use ChatGPT Instead of Writing Code

One potent use of LLMs is to extract structured data from unstructured data. You can ask the model to do what you want instead of asking it to write code. This approach is helpful for processing small datasets or making a first pass on larger ones. In the long term, writing code is better because it’s auditable, repeatable, and much cheaper to run at scale.

In this first example, I extract data from the Wikipedia article on Chemistry.

Extract only the names of chemical laws, as JSON list of strings.

######

Avogadro's law

Beer–Lambert law

Boyle's law (1662, relating pressure and volume)

Charles's law (1787, relating volume and temperature)

Fick's laws of diffusion

Gay-Lussac's law (1809, relating pressure and temperature)

Le Chatelier's principle

Henry's law

Hess's law

Law of conservation of energy leads to the important concepts of equilibrium, thermodynamics, and kinetics.

Law of conservation of mass continues to be conserved in isolated systems, even in modern physics. However, special relativity shows that due to mass–energy equivalence, whenever non-material "energy" (heat, light, kinetic energy) is removed from a non-isolated system, some mass will be lost with it. High energy losses result in loss of weighable amounts of mass, an important topic in nuclear chemistry.

Law of definite composition, although in many systems (notably biomacromolecules and minerals) the ratios tend to require large numbers, and are frequently represented as a fraction.

Law of multiple proportions

Raoult's law

Which results in:

["Avogadro's law", "Beer–Lambert law", "Boyle's law", "Charles's law", "Fick's laws of diffusion", "Gay-Lussac's law", "Le Chatelier's principle", "Henry's law", "Hess's law", "Law of conservation of energy", "Law of conservation of mass", "Law of definite composition", "Law of multiple proportions", "Raoult's law"]

Then I used what’s called “few-shot prompting” to teach the model the pattern that I’m looking for before giving it a list of entries to process:

Input: Actinium(III) chloride – AcCl3[1]

Output: {"name": "Actinium(III) chloride", "formula": "AcCl3"}

Input: Actinium(III) fluoride – AcF3[2]

Output: {"name": "Actinium(III) fluoride", "formula": "AcF3"}

Input: Actinium(III) oxide – Ac2O3[3]

Output: {"name": "Actinium(III) oxide", "formula": "Ac2O3"}

Input: Aluminium antimonide – AlSb[4][5]

Aluminium arsenate – AlAsO4[6]

Aluminium arsenide – AlAs[7][8]

Aluminium diboride – AlB2[9][10]

Aluminium bromide – AlBr3[11]

Aluminium carbide – Al4C3[12]

Aluminium iodide – AlI3[13]

Aluminium nitride – AlN[14]

Aluminium oxide – Al2O3[15]

Aluminium phosphide – AlP[16]

Aluminium chloride – AlCl3[17]

Aluminium fluoride – AlF3[18]

Which results in:

{"name": "Aluminium antimonide", "formula": "AlSb"}

{"name": "Aluminium arsenate", "formula": "AlAsO4"}

{"name": "Aluminium arsenide", "formula": "AlAs"}

{"name": "Aluminium diboride", "formula": "AlB2"}

{"name": "Aluminium bromide", "formula": "AlBr3"}

{"name": "Aluminium carbide", "formula": "Al4C3"}

{"name": "Aluminium iodide", "formula": "AlI3"}

{"name": "Aluminium nitride", "formula": "AlN"}

{"name": "Aluminium oxide", "formula": "Al2O3"}

{"name": "Aluminium phosphide", "formula": "AlP"}

{"name": "Aluminium chloride", "formula": "AlCl3"}

{"name": "Aluminium fluoride", "formula": "AlF3"}
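
If you find yourself repeating this exchange, the prompt can be assembled and the reply checked with a few lines of Python. This is a minimal sketch, not part of the original workflow: the function names are hypothetical, and the reply is still pasted back from the chat window by hand. Building the example outputs with `json.dumps` means every example shown to the model is valid JSON.

```python
import json

# Worked examples copied from the prompt above (reference markers kept as-is)
EXAMPLES = [
    ("Actinium(III) chloride – AcCl3[1]", {"name": "Actinium(III) chloride", "formula": "AcCl3"}),
    ("Actinium(III) fluoride – AcF3[2]", {"name": "Actinium(III) fluoride", "formula": "AcF3"}),
]


def build_few_shot_prompt(examples, entries):
    """Format the worked examples, then the entries left for the model."""
    parts = []
    for text, record in examples:
        parts.append(f"Input: {text}")
        parts.append(f"Output: {json.dumps(record, ensure_ascii=False)}")
    parts.append("Input: " + "\n".join(entries))
    return "\n".join(parts)


def parse_json_lines(reply):
    """Parse one JSON object per non-empty line; raises ValueError on bad JSON."""
    return [json.loads(line) for line in reply.splitlines() if line.strip()]


prompt = build_few_shot_prompt(
    EXAMPLES,
    ["Aluminium antimonide – AlSb[4][5]", "Aluminium arsenate – AlAsO4[6]"],
)
print(prompt)

# First two lines of the model output shown above, pasted back as a string
reply = (
    '{"name": "Aluminium antimonide", "formula": "AlSb"}\n'
    '{"name": "Aluminium arsenate", "formula": "AlAsO4"}'
)
records = parse_json_lines(reply)
print(records[0]["formula"])
```

Parsing the reply is a cheap first check: a line that fails to parse tells you immediately that the model drifted from the pattern.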

Explain Code

This use case is straightforward: Ask the model to tell you what the code does:

Describe this code succinctly

####

    x = np.linspace(xmin, xmax, width)
    y = np.linspace(ymin, ymax, height)
    X, Y = np.meshgrid(x, y)
    C = X + 1j * Y
    Z = np.zeros_like(C)
    img = np.zeros((height, width))

    for i in range(max_iter):
        mask = np.abs(Z) < 2
        Z[mask] = Z[mask] * Z[mask] + C[mask]
        img += mask

Which results in the following (This was in a different session from when I generated the code above):

This code generates an image of the Mandelbrot set by iterating through a grid of complex numbers. It initializes arrays for the x and y coordinates, creates a meshgrid, and calculates the corresponding complex numbers. It then performs iterations using the Mandelbrot formula, updating the values of the complex numbers and keeping track of the iterations. Finally, it generates an image based on the number of iterations.

Document Code

Again, asking the model to do what you want is the simplest thing: “write a docstring”. There’s little context in the code below, yet the model understood that this code shifts data east–west. (I’m pleasantly surprised by that, actually!)

Write docstring

####

    def shift_grid(data, lon_shift):
        i0 = np.argmin(np.fabs(lonsout-lon_shift))
        i0_shift = len(lonsout)-i0
        dataout = np.zeros(arrout.shape,arrout.dtype)
        dataout[..., :i0_shift] = arrout[..., i0:]
        dataout[..., i0_shift:] = arrout[..., 1:i0+1]
        return dataout

Returns:

    def shift_grid(data: np.ndarray, lon_shift: float) -> np.ndarray:
        """Shift a grid of data along the longitude axis.

        Args:
            data (np.ndarray): Input data grid.
            lon_shift (float): Longitude shift value.

        Returns:
            np.ndarray: Shifted data grid.

        Notes:
            The function shifts the input data grid along the longitude axis. The shift is
            determined by finding the longitude value closest to `lon_shift` in the array
            `lonsout`. The data is wrapped around so that the shifted grid preserves the
            periodicity of longitudes.

        Example:
            data = np.array([[1, 2, 3, 4],
                             [5, 6, 7, 8]])
            lon_shift = 1.5
            shifted_data = shift_grid(data, lon_shift)
            # Output: np.array([[4, 1, 2, 3],
            #                   [8, 5, 6, 7]])
        """
        i0 = np.argmin(np.fabs(lonsout - lon_shift))
        i0_shift = len(lonsout) - i0
        dataout = np.zeros(arrout.shape, arrout.dtype)
        dataout[..., :i0_shift] = arrout[..., i0:]
        dataout[..., i0_shift:] = arrout[..., 1:i0 + 1]
        return dataout

We can even ask it to use the Numpydoc style instead without providing the code again:

Use the Numpydoc style

Returns:

    def shift_grid(data: np.ndarray, lon_shift: float) -> np.ndarray:
        """
        Shift a grid of data along the longitude axis.

        Parameters
        ----------
        data : np.ndarray
            Input data grid.
        lon_shift : float
            Longitude shift value.

        Returns
        -------
        np.ndarray
            Shifted data grid.

        Notes
        -----
        The function shifts the input data grid along the longitude axis. The shift is
        determined by finding the longitude value closest to `lon_shift` in the array
        `lonsout`. The data is wrapped around so that the shifted grid preserves the
        periodicity of longitudes.

        Examples
        --------
        >>> data = np.array([[1, 2, 3, 4],
        ...                  [5, 6, 7, 8]])
        >>> lon_shift = 1.5
        >>> shifted_data = shift_grid(data, lon_shift)
        >>> shifted_data
        array([[4, 1, 2, 3],
               [8, 5, 6, 7]])
        """
        i0 = np.argmin(np.fabs(lonsout - lon_shift))
        i0_shift = len(lonsout) - i0
        dataout = np.zeros(arrout.shape, arrout.dtype)
        dataout[..., :i0_shift] = arrout[..., i0:]
        dataout[..., i0_shift:] = arrout[..., 1:i0 + 1]
        return dataout

Improve Code

You can ask ChatGPT to modify your code, add type hints, or do some refactorings. If you’re asking it to do refactorings, I hope you have tests to validate that the code’s behavior hasn’t changed. (You have tests, right? Right? If not, ask ChatGPT to “Write tests for this”!)

Add type hints to this:

#####

    class Particle(object):
        def __init__(self, mass, velocity):
            self.mass = mass
            self.velocity = velocity

        def __add__(self, other):
            if not isinstance(other, Particle):
                return NotImplemented
            mnew = self.mass + other.mass
            vnew = (self.momentum() + other.momentum()) / mnew
            return Particle(mnew, vnew)

        def __repr__(self):
            return f"Particle(mass={self.mass!r}, velocity={self.velocity!r})"

        def energy(self):
            return 0.5 * (self.mass * self.velocity**2)

        def momentum(self):
            return self.mass * self.velocity

Which returns:

    class Particle:
        def __init__(self, mass: float, velocity: float) -> None:
            self.mass = mass
            self.velocity = velocity

        def __add__(self, other: "Particle") -> "Particle":
            if not isinstance(other, Particle):
                return NotImplemented
            mnew = self.mass + other.mass
            vnew = (self.momentum() + other.momentum()) / mnew
            return Particle(mnew, vnew)

        def __repr__(self) -> str:
            return f"Particle(mass={self.mass!r}, velocity={self.velocity!r})"

        def energy(self) -> float:
            return 0.5 * (self.mass * self.velocity**2)

        def momentum(self) -> float:
            return self.mass * self.velocity
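
To make the point about tests concrete, here is a minimal sketch of the kind of checks that would catch a behavior change after a refactoring. The class is repeated (without `__repr__`) so the snippet runs on its own; the test names and numeric values are made up for illustration, and plain `assert` statements stand in for a test framework such as pytest.

```python
class Particle:
    def __init__(self, mass: float, velocity: float) -> None:
        self.mass = mass
        self.velocity = velocity

    def __add__(self, other: "Particle") -> "Particle":
        if not isinstance(other, Particle):
            return NotImplemented
        mnew = self.mass + other.mass
        vnew = (self.momentum() + other.momentum()) / mnew
        return Particle(mnew, vnew)

    def energy(self) -> float:
        return 0.5 * (self.mass * self.velocity**2)

    def momentum(self) -> float:
        return self.mass * self.velocity


def test_momentum_and_energy():
    p = Particle(mass=2.0, velocity=3.0)
    assert p.momentum() == 6.0
    assert p.energy() == 9.0


def test_addition_conserves_mass_and_momentum():
    a, b = Particle(2.0, 3.0), Particle(1.0, 0.0)
    merged = a + b
    assert merged.mass == 3.0
    assert merged.momentum() == a.momentum() + b.momentum()


def test_adding_a_non_particle_raises():
    # Returning NotImplemented from __add__ makes Python raise TypeError
    try:
        Particle(1.0, 1.0) + 5
    except TypeError:
        pass
    else:
        raise AssertionError("expected TypeError")


for test in (
    test_momentum_and_energy,
    test_addition_conserves_mass_and_momentum,
    test_adding_a_non_particle_raises,
):
    test()
print("all tests passed")
```

Run the same tests before and after asking the model to refactor; if they still pass, you have some evidence the behavior is unchanged.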

Write Better Commit and PR Messages

Commit messages in version control systems like Git are an essential communication tool between developers (and you and your future self). You can use ChatGPT to draft them.

Once you’ve staged your changes in Git, copy the diff (you can use `git diff --cached | pbcopy` on macOS) and use the prompt below for a rough draft of the commit message. But remember that commit messages are most valuable when they explain the context of why a change is made. You’ll have to provide that. The model has no idea.

Write a commit message for this diff. Start with a 50-character title line plus a detailed body, if necessary.

#####

<DIFF>
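
If you’d rather not depend on `pbcopy`, the same prompt can be assembled from Python. This is a sketch under a few assumptions: the function names are hypothetical, and the optional “Context for this change” line is my addition, there to carry the why that the model can’t infer. The example at the bottom uses a made-up diff so it runs outside a Git repository.

```python
import subprocess

PROMPT_TEMPLATE = (
    "Write a commit message for this diff. Start with a 50-character "
    "title line plus a detailed body, if necessary.\n"
    "#####\n"
    "{diff}"
)


def staged_diff() -> str:
    """Return the staged changes, as printed by `git diff --cached`."""
    result = subprocess.run(
        ["git", "diff", "--cached"], capture_output=True, text=True, check=True
    )
    return result.stdout


def build_commit_prompt(diff: str, context: str = "") -> str:
    """Fill in the prompt; `context` is the 'why' that only you can supply."""
    prompt = PROMPT_TEMPLATE.format(diff=diff)
    if context:
        prompt = f"Context for this change: {context}\n\n{prompt}"
    return prompt


# Inside a Git repository you would call build_commit_prompt(staged_diff()).
example_diff = "-print 'hello'\n+print('hello')\n"
print(build_commit_prompt(example_diff, context="Port the script to Python 3"))
```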

Treat the Code Like Any Code You Find on the Internet

Using LLMs to write code doesn’t absolve you from following proper software engineering practices, such as code review and testing. You shouldn’t assume that the generated code works or does what you intend. Treat it like a (very quickly written) first draft that you must modify, refactor, and test.

To learn more about how ChatGPT and LLMs will affect R&D, check out our webinar, What Every R&D Leader Needs to Know About ChatGPT and LLMs.

__________________________________________

Author: Alexandre Chabot-Leclerc, Vice President, Digital Transformation Solutions, holds a Ph.D. in electrical engineering and a M.Sc. in acoustics engineering from the Technical University of Denmark and a B.Eng. in electrical engineering from the Université de Sherbrooke. He is passionate about transforming people and the work they do. He has taught the scientific Python stack and machine learning to hundreds of scientists, engineers, and analysts at the world’s largest corporations and national laboratories. After seven years in Denmark, Alexandre is totally sold on commuting by bicycle. If you have any free time you’d like to fill, ask him for a book, music, podcast, or restaurant recommendation.
